Generative AI has taken several industries by storm, including healthcare and medical publishing. Although its full impact on medical publishing is not yet known, an article published in The Lancet Digital Health argues that the medical community's experience so far suggests it may have significant ethical consequences.1

Ethical Implications of Generative AI in Medical Writing and Publishing

The advent of generative AI in medical writing and publishing has brought many opportunities and advances. However, it also raises important ethical concerns that need attention. In this age of data-driven medicine, the ethical implications of generative AI in medical writing are multifaceted.

From privacy and security issues to potential algorithmic biases and the need for accountability and transparency, medical stakeholders must address these complex issues to ensure responsible and beneficial implementation.2 By understanding these ethical implications, we can work toward the responsible and ethical use of generative AI to improve medical writing and publishing while prioritizing equitable treatment for all patients.

Data Privacy and Security Concerns

- Collection and Storage of Sensitive Medical Information: Generative AI models used in medical writing may require access to large amounts of medical data, including patient records, research studies, and clinical databases. The collection and storage of such sensitive information raise concerns regarding patient privacy and confidentiality.3 Proper safeguards must be in place to protect the data from unauthorized access or breaches.

- Potential for Unauthorized Access or Misuse of Data: The use of generative AI in medical writing introduces the risk of unauthorized access or misuse of patient data. This can include data breaches, hacking attempts, or improper use of data by malicious agents. Robust security measures and adherence to data protection regulations, such as HIPAA (Health Insurance Portability and Accountability Act), are essential to mitigate these risks.4

Algorithmic Bias and Fairness

- Impact of Biased Training Data on AI-Generated Content: Generative AI models are trained on large datasets, and if these datasets contain biased or unrepresentative information, the generated content may reproduce those biases. In medical writing, biased content can have serious consequences: incorrect diagnoses, disparities in treatment recommendations, or the spread of stereotypes.5 It is therefore crucial to ensure that training data is diverse, representative, and as free from bias as possible.

- Ensuring Fairness and Inclusivity in AI-Generated Medical Writing: Generative AI models must be designed and trained to produce content that is fair, inclusive, and unbiased. This includes considering factors such as race, gender, and socioeconomic status when generating medical content to avoid perpetuating existing disparities. Regular audits, monitoring, and continuous improvement of the models can help mitigate bias and promote fairness.

Accountability and Transparency

- Responsibility for Errors or Inaccuracies in AI-Generated Content: When AI systems generate medical content, questions of responsibility arise: who is accountable for errors or inaccuracies? A discussion document by COPE (Committee on Publication Ethics) gives a comprehensive overview of the implications of incorporating artificial intelligence (AI) into decision-making processes in publishing.6 It highlights the need to weigh the challenges and benefits of AI solutions carefully and offers recommendations on establishing and upholding best practices in this context. Although responsibility ultimately lies with the organizations or individuals using the technology, clear protocols for validation and human oversight are essential to ensure that the generated content is accurate and reliable.

- Need for Transparency in Disclosing AI-Generated Content to Readers: Readers of medical content have the right to know whether the information they are consuming is generated by AI systems. Transparent disclosure is necessary to maintain trust and enable readers to assess the reliability of the content.6 Clear indications, such as disclaimers or labelling, can help readers differentiate between human-authored and AI-generated content.

Addressing these ethical implications requires collaboration among medical professionals, regulators, and policymakers. By proactively addressing these concerns, the ethical use of generative AI in medical writing can be upheld while maximizing its potential benefits.7

Balancing Autonomy and Human Involvement in Medical Writing and Publishing

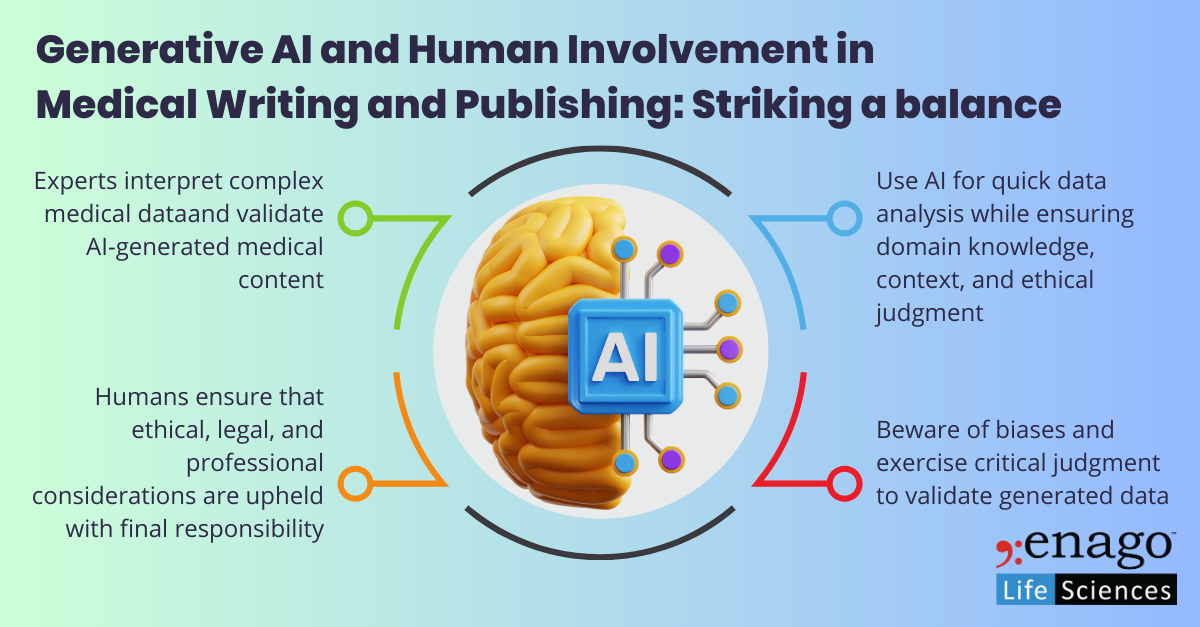

While generative AI systems have shown remarkable capabilities in automating certain aspects of medical writing, human experts remain indispensable in this field. Striking the right balance between automation and human involvement is essential to realize the benefits of generative AI while upholding the integrity, accuracy, and ethical standards of medical writing.

- Expertise and Judgment in Interpreting Medical Information: Human experts, such as medical professionals and researchers, possess specialized knowledge and experience in interpreting complex medical information. They can understand the context, nuances, and implications of medical data, which is essential for accurate and reliable medical writing. Human intervention brings unique expertise to the table when reviewing, validating, and contextualizing AI-generated content, ensuring its accuracy and appropriateness.

- Ensuring Human Oversight and Accountability in AI-Generated Content: While generative AI can automate certain aspects of medical writing, human oversight is crucial to maintain accountability. Human experts play a vital role in ensuring that AI-generated content aligns with ethical standards, guidelines, and legal regulations.8 They are responsible for identifying and rectifying any errors or misleading information that may arise from AI-generated content. Human involvement ensures that ethical, legal, and professional considerations are upheld, and the final responsibility for the content remains with human experts.

- Recognizing the Limitations and Biases of AI Systems: AI systems lack human-like intuition, contextual understanding, and ethical reasoning.9 Moreover, they can be prone to biases based on the data they are trained on. Human experts must be aware of these limitations and biases when utilizing AI-generated content, and they should exercise critical judgment to validate and supplement the generated information as needed.

- Leveraging AI as a Tool to Augment Human Capabilities Rather than Replacing Them: The goal should be to strike a balance between automation and human involvement in medical writing. AI can serve as a powerful tool to assist and augment human capabilities, rather than replacing human expertise entirely. By leveraging AI, human experts can benefit from increased efficiency, data analysis, and content generation, while also providing the necessary domain knowledge, context, and ethical judgment that AI systems lack.10 This collaborative approach ensures that the strengths of both AI and human experts are utilized effectively, resulting in high-quality and trustworthy medical writing.

The relationship between AI and human involvement in medical writing is dynamic and evolving. As AI technology progresses, we must continually assess the impact and implications of AI-generated content and ensure that human expertise remains an integral part of the process. By maintaining a balance between automation and human involvement, we can harness the potential of generative AI while upholding ethical standards and delivering accurate, reliable medical information.

References:

- Liebrenz, Michael, Roman Schleifer, Anna Buadze, Dinesh Bhugra, and Alexander Smith. 2023. “Generating Scholarly Content with ChatGPT: Ethical Challenges for Medical Publishing.” The Lancet Digital Health 5 (3). https://doi.org/10.1016/s2589-7500(23)00019-5.

- Naik, Nithesh, B. M. Zeeshan Hameed, Dasharathraj K. Shetty, Dishant Swain, Milap Shah, Rahul Paul, Kaivalya Aggarwal, et al. 2022. “Legal and Ethical Consideration in Artificial Intelligence in Healthcare: Who Takes Responsibility?” Frontiers in Surgery 9: 862322. https://doi.org/10.3389/fsurg.2022.862322.

- Farhud, Dariush D., and Shaghayegh Zokaei. 2021. “Ethical Issues of Artificial Intelligence in Medicine and Healthcare.” Iranian Journal of Public Health 50 (11). https://doi.org/10.18502/ijph.v50i11.7600.

- Centers for Disease Control and Prevention. 2022. “Health Insurance Portability and Accountability Act of 1996 (HIPAA).” June 27, 2022. https://www.cdc.gov/phlp/publications/topic/hipaa.html.

- Zohny, Hazem, John McMillan, and Mike King. 2023. “Ethics of Generative AI.” Journal of Medical Ethics 49 (2): 79–80. https://doi.org/10.1136/jme-2023-108909.

- “Artificial Intelligence (AI) in Decision Making.” n.d. COPE: Committee on Publication Ethics. https://publicationethics.org/node/50766.

- Harrer, Stefan. 2023. “Attention Is Not All You Need: The Complicated Case of Ethically Using Large Language Models in Healthcare and Medicine.” EBioMedicine 90 (April): 104512. https://doi.org/10.1016/j.ebiom.2023.104512.

- UNESCO. 2021. “Recommendation on the Ethics of Artificial Intelligence.” November 23, 2021. https://www.unesco.org/en/legal-affairs/recommendation-ethics-artificial-intelligence.

- McKendrick, Joe, and Andy Thurai. 2022. “AI Isn’t Ready to Make Unsupervised Decisions.” Harvard Business Review. September 15, 2022. https://hbr.org/2022/09/ai-isnt-ready-to-make-unsupervised-decisions.

- Bhosale, Uttkarsha. 2023. “ChatGPT’s Limitations in Research.” Enago Academy. June 5, 2023. https://www.enago.com/academy/chatgpt-cannot-do-for-researchers-2/.

Author:

Uttkarsha Bhosale

Editor, Enago Academy

Medical Writer, Enago Life Sciences

