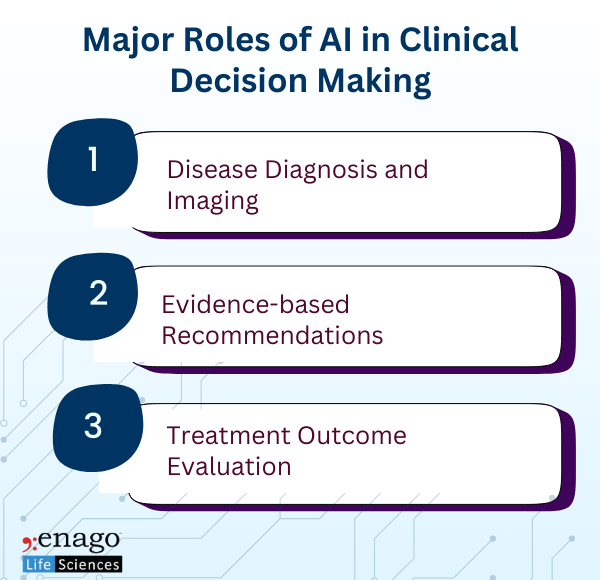

Several studies have reported the potential of AI and machine learning (ML) models to enhance clinical decision-making. Integrating them into healthcare systems not only strengthens the decision-making process but also provides timely insights that boost the overall efficiency of clinical systems.

Although these systems can improve clinical processes, the ethical risks associated with them cannot be ignored. Addressing these ethical considerations is therefore essential to minimize potential harms and maximize the benefits of AI augmentation.

Ethical Considerations While Using AI in Clinical Decision-Making

Understanding the ethical considerations in AI-driven clinical decision-making guides the responsible integration of AI with human expertise, ultimately improving the overall efficacy of the healthcare system. The key ethical considerations associated with using AI in clinical decision-making are outlined below.

1. Patient Autonomy and Consent

Patient autonomy and informed consent are central principles of medical ethics. An empirical study investigating the complex interplay between AI and patient autonomy identified trust, ethical concerns, and privacy risks as pivotal factors influencing patient autonomy in AI-enabled healthcare decision-making. Furthermore, the complexity of AI systems can make it difficult for patients to understand how decisions about their care are reached, which in turn makes genuinely informed consent harder to obtain.

2. Bias and Fairness

AI can inherit biases from its training data, potentially leading to unfair treatment of patients based on their race, gender, region, socioeconomic status, and other factors. Additionally, AI systems may overgeneralize patterns in their training data and fail to account for the nuances of specific clinical conditions. As a result, deploying a biased AI system directly in healthcare increases the risk of discrimination against patients, leading to unfair treatment recommendations and unequal outcomes.

3. Accountability and Liability

Determining accountability for AI-driven decisions or recommendations can be complex. When a clinician acts on an AI recommendation, it can be difficult to assess how much of the responsibility rests with that clinician. Traditional medical practice places responsibility on healthcare providers, but with AI, this responsibility may be distributed among developers, clinicians, and the institutions using the technology.

4. Privacy and Data Security

The use of AI in clinical decision-making often requires access to large amounts of sensitive patient data. Protecting this data involves implementing robust cybersecurity measures and adhering to regulations on data usage, storage, and sharing. In addition, the “black box” nature of many AI algorithms makes it difficult for clinicians and patients to understand how these systems arrive at their recommendations, even though such understanding is crucial for explaining and justifying the tool's suggestions.

5. Clinical Effectiveness and Safety

AI tools must be rigorously validated for clinical effectiveness and safety before being integrated into practice. This involves conducting thorough clinical trials, continuous performance monitoring, and post-market surveillance. Ensuring that AI systems are safe and effective is essential to protect patient health and maintain confidence in AI-driven clinical decision-making.

6. Equitable Access

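The growing prevalence of AI in the healthcare industry makes it necessary to ensure equitable access to these technologies. Disparities in access to AI-driven healthcare could exacerbate existing health inequities; for example, if advanced AI tools are available mainly in well-resourced hospitals, patients served by under-resourced facilities could fall further behind.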

AI should augment, not replace, human clinicians. Beyond individual ethical concerns, there is a broader imperative to use AI ethically within healthcare systems. This includes prioritizing patient welfare, ensuring equitable access to AI-driven tools, and fostering an inclusive approach to AI development. Ethical use of AI also requires ongoing ethical reflection and engagement with diverse stakeholders, including patients, clinicians, ethicists, and policymakers.

The integration of AI into clinical decision-making holds great promise for improving healthcare outcomes. However, it also introduces complex ethical challenges that require careful consideration and proactive management. By addressing these ethical considerations head-on, we can work towards responsible AI augmentation in healthcare while upholding the fundamental principles of medical ethics and patient care.

References:

1. Khosravi, M. et al. (2024) Artificial Intelligence and decision-making in Healthcare: A thematic analysis of a systematic review of reviews, Health Services Research and Managerial Epidemiology. Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10916499/ (Accessed: 22 November 2024).

2. (No date) Portico. Available at: https://access.portico.org/Portico/auView?auId=ark%3A%2F27927%2Fphw1p05hr2w (Accessed: 22 November 2024).

3. Ramgopal, S. et al. (2022) Artificial Intelligence-based clinical decision support in Pediatrics, Nature News. Available at: https://www.nature.com/articles/s41390-022-02226-1 (Accessed: 22 November 2024).

Author:

Anagha Nair

Editorial Assistant, Enago Academy

Medical Writer, Enago Life Sciences
